Model shows promise in differentiating from hypertrophic cardiomyopathy and other conditions

Researchers at Cleveland Clinic have developed a deep learning model that can help differentiate cardiac amyloidosis from other etiologies of suspected cardiomyopathy on cardiac magnetic resonance imaging (CMR) when the diagnosis isn’t clear. They describe the model and its validation in a new research article published in JACC: Cardiovascular Imaging.

The model’s algorithm could potentially be integrated into a clinical decision support tool to help improve diagnostic accuracy, particularly at centers with less experience in reading CMR. Studies have reported a median delay of 2.6 to 3.4 years from the first symptoms of cardiac amyloidosis to diagnosis, with about one-third to one-half of patients being misdiagnosed, most often as having hypertrophic cardiomyopathy (HCM). Both conditions can appear as thickened heart muscle on CMR.

The investigational model could help reduce both treatment delay and misdiagnosis, says lead investigator Deborah Kwon, MD, Director of Cardiac MRI at Cleveland Clinic.

“The most common reason patients are referred for CMR is to evaluate suspected cardiomyopathy,” Dr. Kwon explains. “There are pathognomonic tissue features of either cardiac amyloidosis or HCM that readily distinguish between these two entities, particularly when the diseases are more advanced. However, patients can demonstrate nondistinct findings that just don’t fit the textbook imaging features. As a result, there’s uncertainty about the diagnosis.”

“This study is an important step in the right direction, given that these two disease phenotypes overlap with respect to clinical characteristics and CMR findings, yet they have completely different treatments and prognosis,” adds co-author Mazen Hanna, MD, a cardiologist and Co-Director of Cleveland Clinic’s Amyloidosis Center.

Diagnostic uncertainty is greatest early in the disease process. Given the increased awareness of cardiac amyloidosis, patients suspected of having the condition are referred for CMR sooner than in the past. At the same time, disease-modifying therapies have recently become available. “Previously, being given a diagnosis of cardiac amyloidosis was associated with a grim prognosis,” Dr. Kwon says. “But it is exciting that now there are a growing number of therapeutic options for these patients. Many studies have been showing that the earlier you diagnose these patients, the better their outcomes.”

To address the diagnostic uncertainty problem, Dr. Kwon — along with data scientist David Chen, PhD, and colleagues — used a relatively new type of deep learning model called a vision transformer (ViT), which has been shown to outperform the older convolutional neural networks in accuracy and computational efficiency.

The data science team, led by Dr. Chen, developed the model first using a retrospective analysis of data from 807 patients who had been referred for CMR at Cleveland Clinic in Ohio for suspicion of infiltrative disease or HCM. The subsequent confirmed diagnoses (via serum light chains, technetium-99m pyrophosphate scans or biopsies) were cardiac amyloidosis in 252, HCM in 290 and cardiomyopathy other than amyloid/HCM (“other”) in 265. Those patients were split 70/30 into training and test groups.
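
The article does not say how the 70/30 split was drawn. As a hypothetical illustration only, the sketch below performs a stratified split, which keeps the three diagnosis groups in the same proportions in the training and test sets. Stratification, the seed and the helper name `stratified_split` are assumptions, not the study’s documented method; only the class counts come from the article.

```python
import random
from collections import Counter

# Class counts reported in the article for the Ohio (internal) cohort.
COUNTS = {"amyloidosis": 252, "hcm": 290, "other": 265}

def stratified_split(labels, train_pct=70, seed=0):
    """Hypothetical helper: split each class train_pct/(100 - train_pct) into train/test.

    The article does not describe how its 70/30 split was drawn; stratifying
    by diagnosis is an assumption made for illustration.
    """
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(labels):
        by_class.setdefault(label, []).append(i)
    train, test = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = len(indices) * train_pct // 100  # integer floor avoids float rounding surprises
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test

# One label per patient, expanded from the reported counts.
labels = [name for name, n in COUNTS.items() for _ in range(n)]
train, test = stratified_split(labels)
print(len(labels), len(train), len(test))  # → 807 564 243
print(dict(Counter(labels[i] for i in test)))  # → {'amyloidosis': 76, 'hcm': 87, 'other': 80}
```

Stratifying keeps the test set’s share of amyloidosis cases (76 of 243, about 31%) close to the full cohort’s (252 of 807), so test accuracy is not skewed by an unlucky draw.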

Another group of 157 patients from Cleveland Clinic Florida was used for external validation. Of those, 51 had cardiac amyloidosis.

The ViT model was trained to identify cardiac amyloidosis using thousands of CMR images from the patients with definitive diagnoses so that it “learns” what to look for. “CMR is very labor-intensive, with a thousand images to go through to arrive at the final diagnosis,” Dr. Kwon explains. “Whereas it typically takes 30 to 40 minutes for someone to review a CMR image and come to a final diagnosis, this inference tool takes roughly 17 seconds.”

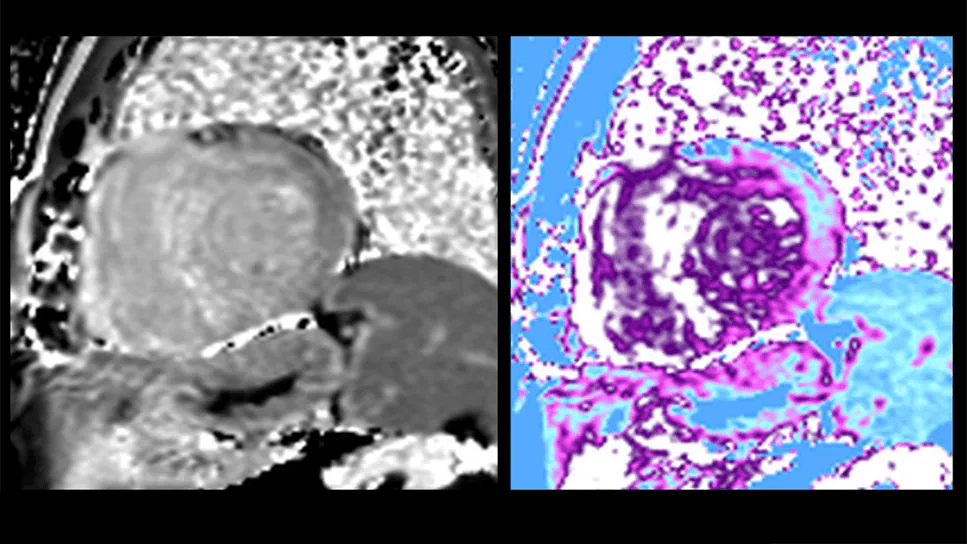

In the internal testing dataset (from Ohio), diagnostic accuracy for cardiac amyloidosis with the ViT model was 84.1%, with an area under the curve of 0.954. In the external testing set (from Florida), those values were 82.8% and 0.957, respectively.
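
Accuracy and area under the curve (AUC) answer different questions, which is why the two numbers above differ. Here is a minimal sketch, assuming a binary amyloidosis-versus-not framing and a 0.5 decision threshold (neither is specified in the article). Accuracy counts correct calls at one threshold. AUC, computed here with the rank-based (Mann–Whitney) formulation, is the probability that a randomly chosen amyloidosis case scores higher than a randomly chosen non-amyloidosis case. The function names and the toy scores are hypothetical and are not study data.

```python
from typing import Sequence

# Hypothetical helpers; assumes labels are 1 = amyloidosis, 0 = not amyloidosis.

def accuracy(y_true: Sequence[int], y_score: Sequence[float], threshold: float = 0.5) -> float:
    """Fraction of cases whose thresholded score matches the true label."""
    correct = sum((s >= threshold) == bool(y) for y, s in zip(y_true, y_score))
    return correct / len(y_true)

def auc(y_true: Sequence[int], y_score: Sequence[float]) -> float:
    """ROC AUC: chance a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for y, s in zip(y_true, y_score) if y == 1]
    neg = [s for y, s in zip(y_true, y_score) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

# Toy, made-up scores for six cases (not from the study).
y_true = [1, 1, 1, 0, 0, 0]
y_score = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
print(round(accuracy(y_true, y_score), 3))  # → 0.667
print(round(auc(y_true, y_score), 3))       # → 0.889
```

In this toy example, two cases fall on the wrong side of the 0.5 threshold, which lowers accuracy, yet the scores still rank most amyloidosis cases above most other cases, so AUC stays higher. The same pattern appears in the reported 84.1% accuracy versus an AUC of 0.954.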

The physician’s level of confidence about the diagnosis made a difference. When the clinical reports reflected a moderate to high degree of physician confidence, accuracy of the ViT model was 90%, compared with 61.1% for studies with reported uncertain, missing or incorrect diagnoses of cardiac amyloidosis. In the latter cases, accuracy improved to 79.1% when studies with poor image quality, dual pathologies or ambiguity of clinically significant cardiac amyloidosis were removed.

Discriminative accuracy was similar between MRI scanners manufactured by Philips (83.9%) and Siemens (86.2%).

Patients who had been incorrectly classified by the algorithm had lower left ventricular mass (141 g vs. 163 g; P = .048). However, there were no imaging features that significantly differentiated patients who were correctly classified with HCM by the ViT model versus those who were not.

Interestingly, the ViT model exceeded clinician performance in the Ohio group but not in the Florida group, where human readers were 15% more accurate than in the Ohio cohort. Dr. Kwon says this difference is likely because the Florida patients more often had advanced cardiac amyloidosis at presentation. Those patients displayed more of the classic CMR features, which yielded higher diagnostic confidence and improved diagnostic accuracy.

“This study illustrates how important it is to carefully consider how an AI tool should be implemented in the context of the referral base, the types of patients who are presenting and the doctors who are reading the studies,” Dr. Kwon says. She adds that the tool would be best limited to situations of uncertainty, given that its accuracy was 84.7% in Florida cases where the physician was highly confident, a cohort in which the disease presentation tended to be more advanced.

“When the doctor was confident, it could have changed the right answer to the wrong answer for 8% to 15% of patients,” Dr. Kwon says. “I’m concerned about that, because we shouldn’t be changing right diagnoses to wrong diagnoses. My recommendation is that if the physician is confident, they should just go with their non-AI-assisted diagnostic assessment. However, if they are uncertain, this AI tool can provide an extra layer of diagnostic guidance.”

Dr. Hanna adds that the stakes for providing that additional level of diagnostic certainty are high. “The importance of making the correct diagnosis is paramount,” he says, “given that both HCM and cardiac amyloidosis have specific disease-modifying treatments.”

As a next step, Dr. Kwon plans to test this implementation strategy formally. She will ask expert colleagues to review the test cohort of patients from this study and then assess how exposure to the AI tool affects their confidence in the diagnoses they made before seeing its output.

She credits Cleveland Clinic’s distinctive multidisciplinary team with making this effort possible. In particular, she cites her co-senior author Dr. Chen, who developed the AI methodology. “Having an on-site expert multidisciplinary team is critical,” Dr. Kwon notes. “Developing AI algorithms on site enables a deeper understanding of the strengths and weaknesses of AI tools, as well as the ability to customize their deployment. Unless a physician has a data science background, there’s no way they can develop and implement this on their own.”

She adds that technology is not yet at a stage for a “plug and play” approach with AI clinical decision-support tools. “Much work is still needed to determine the best methodology for clinical implementation, and this requires close collaboration with data science and clinical experts,” she concludes. “This is an exciting time to be in this space. The rapid developments emerging from many different centers will be transforming how we diagnose and care for our patients.”
